In this post, we’ll build a straightforward gRPC reference data service for common stocks. The service will talk to a Postgres database and expose endpoints for adding and listing stocks over gRPC.

Before diving into the specifics, ensure you’re up to speed with the setup covered in Part 1 as it lays the groundwork for the concepts and configurations we’ll build upon here.

Everything we do in this section will take place inside the ./api/ directory.

Unless otherwise specified, commands need to be run from within that directory.

Adding Rust dependencies

To build the gRPC service, we’ll use tonic and prost.

Update your Cargo.toml as follows to include these dependencies alongside the existing ones:

[dependencies]
anyhow = "1.0.79"
dotenvy = "0.15"
prost = "0.12"
prost-types = "0.12"
thiserror = "1.0"
tokio = { version = "1.35", features = ["rt-multi-thread", "macros"] }
tonic = "0.11"
tonic-reflection = "0.11"
tracing = "0.1.40"
tracing-subscriber = { version = "0.3", features = ["env-filter", "json"] }

[build-dependencies]
tonic-build = "0.11"

This will add the necessary crates for the gRPC service, such as tonic and prost.

Creating the gRPC service

The auto-generated service

Let’s kickstart our service by defining its structure in a proto file, which will later be used to auto-generate Rust code.

Create a directory called protos and a new refdata.proto file within it:

syntax = "proto3";

import "google/protobuf/empty.proto";

package refdata;

message Stock {
  int32 id = 1;
  string symbol = 2;
  string name = 3;
}

message Stocks {
  repeated Stock stocks = 1;
}

service RefData {
  rpc AllStocks(google.protobuf.Empty) returns (Stocks);
  rpc AddStock(Stock) returns (google.protobuf.Empty);
}

The service has just two endpoints: one for adding a new stock and one for retrieving all stocks. We’ll need the latter to populate the combobox of stocks in the UI.
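To make the mapping concrete, here is a rough sketch of the Rust types that prost derives from these messages. The real generated code also derives ::prost::Message and annotates each field with its tag; both are left out so the snippet compiles on its own, so treat it as an approximation rather than the exact output. The to_response helper is hypothetical and only there for illustration.

```rust
// Approximate shape of the types generated from refdata.proto.
// The real output derives `::prost::Message` and tags each field;
// both are omitted here so the sketch compiles without prost.
#[derive(Clone, Debug, Default, PartialEq)]
pub struct Stock {
    pub id: i32,        // int32 id = 1
    pub symbol: String, // string symbol = 2
    pub name: String,   // string name = 3
}

#[derive(Clone, Debug, Default, PartialEq)]
pub struct Stocks {
    pub stocks: Vec<Stock>, // repeated Stock stocks = 1
}

/// Hypothetical helper (not part of the generated code) that wraps a
/// list of stocks in the `Stocks` response message.
pub fn to_response(stocks: Vec<Stock>) -> Stocks {
    Stocks { stocks }
}
```

Because proto3 scalar fields always have a default, a default Stock has an id of 0 and empty strings, which is worth keeping in mind when AddStock receives a Stock without an id.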

We will also need a custom build.rs script to compile the protobuf definitions into Rust code.

use std::path::PathBuf;
use std::{env, fs};

fn main() -> Result<(), Box<dyn std::error::Error>> {
    // Cargo sets OUT_DIR for build scripts; the generated code goes there.
    let out_dir = PathBuf::from(env::var("OUT_DIR")?);

    // Collect every file in ./protos so new definitions are picked up automatically.
    let paths = fs::read_dir("./protos")?;
    let models: Vec<_> = paths.flatten().map(|e| e.path()).collect();

    tonic_build::configure()
        // The descriptor set is used later by the reflection service.
        .file_descriptor_set_path(out_dir.join("trading_descriptor.bin"))
        .compile(&models, &["protos"])?;

    Ok(())
}

Finally, and crucially, we must register the generated code as a module within our library.

Let’s create a services.rs module inside the src folder, and include the auto-generated refdata code.

pub const FILE_DESCRIPTOR_SET: &[u8] =
    tonic::include_file_descriptor_set!("trading_descriptor");

pub mod proto {
    pub mod refdata {
        tonic::include_proto!("refdata");
    }
}

Make sure to also register services as a module in lib.rs by adding pub mod services; to it. At this point everything should compile again if you run cargo build.

Error handling

Before we go any further, let’s add a custom Result type and error handling code that will make it a little easier to convert errors between the business layer and the gRPC service layer.

Add a new module for this, error.rs (later code imports it as crate::error), and register it in lib.rs like the others:

use tonic::{Code, Status};
use tracing::error;

#[derive(Debug, thiserror::Error)]
pub enum Error {
    #[error("other error")]
    Other(#[from] anyhow::Error),
}

pub type Result<T> = std::result::Result<T, Error>;

impl From<Error> for Status {
    fn from(err: Error) -> Self {
        match err {
            _ => {
                let error_message = err.to_string();
                error!(error_message, "internal error: {:?}", err);
                Self::new(Code::Internal, error_message)
            }
        }
    }
}

This code converts the errors that may occur into the most appropriate gRPC status; for now, every error maps to Code::Internal. It may not seem very useful just yet, but we’ll extend this to cover SQLx errors later on.
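To show why the catch-all match is worth having, here is a minimal sketch of how the conversion could grow once more error variants exist. It uses simplified stand-ins for tonic’s Code and Status so it compiles on its own, and the NotFound variant, find_stock, and handler are hypothetical names that are not part of this series’ code.

```rust
use std::fmt;

// Stand-ins for tonic's `Code` and `Status`, reduced to what the sketch needs.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Code {
    NotFound,
    Internal,
}

#[derive(Debug, PartialEq, Eq)]
pub struct Status {
    pub code: Code,
    pub message: String,
}

// Hypothetical error type with one more variant than the `Error` above.
#[derive(Debug)]
pub enum Error {
    NotFound(String),
    Other(String),
}

impl fmt::Display for Error {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            Error::NotFound(what) => write!(f, "{} not found", what),
            Error::Other(msg) => write!(f, "other error: {}", msg),
        }
    }
}

impl From<Error> for Status {
    fn from(err: Error) -> Self {
        // Each variant picks its own status code; unknown failures stay internal.
        let code = match err {
            Error::NotFound(_) => Code::NotFound,
            Error::Other(_) => Code::Internal,
        };
        Status { code, message: err.to_string() }
    }
}

// Hypothetical business-layer lookup that fails with the custom error.
pub fn find_stock(symbol: &str) -> Result<&'static str, Error> {
    match symbol {
        "AAPL" => Ok("Apple Inc."),
        "" => Err(Error::Other("empty symbol".to_string())),
        other => Err(Error::NotFound(other.to_string())),
    }
}

// Hypothetical handler: `?` converts `Error` into `Status` via the `From` impl.
pub fn handler(symbol: &str) -> Result<String, Status> {
    let name = find_stock(symbol)?;
    Ok(name.to_string())
}
```

The point of the pattern is that service methods returning a Status can call business-layer functions with a plain ?, and the mapping to status codes lives in one place.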

The skeleton service code

To implement the refdata service, let’s create a new module called refdata.rs, with 3 submodules:

- refdata/models.rs will contain our data models.

- refdata/repository.rs will contain the persistence layer.

- refdata/service.rs will implement the auto-generated service interface.

There is only one simple model for stocks to put in models.rs:

pub struct Stock {
    pub id: i32,
    pub symbol: String,
    pub name: String,
}

Note: Arguably, some types could be a little better - for example the id could be an unsigned integer. However, SQLx maps integers in Postgres to i32 and the type conversions add a significant amount of noise to the code.
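For a sense of the noise the note refers to, here is a minimal sketch assuming a hypothetical model with an unsigned id. UnsignedStock and StockRow are illustrative names only; the point is that every boundary with an i32-based layer would need a fallible conversion like this one.

```rust
use std::convert::TryFrom;
use std::num::TryFromIntError;

// Hypothetical variant of the model with an unsigned id.
#[derive(Debug, PartialEq)]
pub struct UnsignedStock {
    pub id: u32,
    pub symbol: String,
    pub name: String,
}

// Hypothetical row shape, as an i32-based database layer would hand it over.
pub struct StockRow {
    pub id: i32,
    pub symbol: String,
    pub name: String,
}

impl TryFrom<StockRow> for UnsignedStock {
    type Error = TryFromIntError;

    fn try_from(row: StockRow) -> Result<Self, Self::Error> {
        Ok(UnsignedStock {
            // Fails for negative ids, so callers must handle an error case.
            id: u32::try_from(row.id)?,
            symbol: row.symbol,
            name: row.name,
        })
    }
}
```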

The repository code is a little more interesting. We’ll use a trait to describe the desired interface, and provide a Postgres implementation with stub functions for now.

use crate::error::Result;
use crate::refdata::models::Stock;

#[tonic::async_trait]
pub trait RefDataRepository {
    async fn get_all_stocks(&self) -> Result<Vec<Stock>>;
    async fn add_stock(&self, symbol: &str, name: &str) -> Result<Stock>;
}

pub struct PostgresRefDataRepository {}

impl PostgresRefDataRepository {
    pub fn new() -> Self {
        Self {}
    }
}

#[tonic::async_trait]
impl RefDataRepository for PostgresRefDataRepository {
    async fn get_all_stocks(&self) -> Result<Vec<Stock>> {
        todo!()
    }

    async fn add_stock(&self, symbol: &str, name: &str) -> Result<Stock> {
        todo!()
    }
}

Finally, to bring it all together, the code in service.rs will serve as the bridge between the gRPC interface and the repository.

use tonic::{Request, Response, Status};

use crate::refdata::models::Stock as StockModel;
use crate::refdata::repository::RefDataRepository;
use crate::services::proto::refdata::ref_data_server::RefData;
use crate::services::proto::refdata::{Stock, Stocks};

pub struct RefDataService<R> {
    repository: R,
}

impl<R> RefDataService<R> {
    pub fn new(repository: R) -> Self {
        Self { repository }
    }
}

#[tonic::async_trait]
impl<R> RefData for RefDataService<R>
where
    R: RefDataRepository + Send + Sync + 'static,
{
    async fn all_stocks(&self, _request: Request<()>) -> Result<Response<Stocks>, Status> {
        let stocks = self
            .repository
            .get_all_stocks()
            .await?
            .into_iter()
            .map(Into::into)
            .collect();

        Ok(Response::new(Stocks { stocks }))
    }

    async fn add_stock(&self, request: Request<Stock>) -> Result<Response<()>, Status> {
        let stock = request.get_ref();
        self.repository
            .add_stock(&stock.symbol, &stock.name)
            .await?;

        Ok(Response::new(()))
    }
}

impl From<StockModel> for Stock {
    fn from(stock: StockModel) -> Self {
        Stock {
            id: stock.id,
            symbol: stock.symbol,
            name: stock.name,
        }
    }
}

The RefDataService works with any implementation of the repository. In our case, that will be the Postgres implementation using SQLx.
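To illustrate what being generic over the repository buys us, here is a sketch with a hypothetical in-memory implementation, which could be handy in tests. It is deliberately simplified: the trait is synchronous, errors are plain strings, and the service exposes two made-up methods (all_symbols and add) instead of the gRPC interface, so the snippet compiles without tonic.

```rust
use std::sync::Mutex;

#[derive(Clone, Debug, PartialEq)]
pub struct Stock {
    pub id: i32,
    pub symbol: String,
    pub name: String,
}

// Simplified, synchronous stand-in for the async RefDataRepository trait.
pub trait RefDataRepository {
    fn get_all_stocks(&self) -> Result<Vec<Stock>, String>;
    fn add_stock(&self, symbol: &str, name: &str) -> Result<Stock, String>;
}

// Hypothetical in-memory implementation; ids are assigned sequentially.
#[derive(Default)]
pub struct InMemoryRefDataRepository {
    stocks: Mutex<Vec<Stock>>,
}

impl RefDataRepository for InMemoryRefDataRepository {
    fn get_all_stocks(&self) -> Result<Vec<Stock>, String> {
        let stocks = self.stocks.lock().map_err(|e| e.to_string())?;
        Ok(stocks.clone())
    }

    fn add_stock(&self, symbol: &str, name: &str) -> Result<Stock, String> {
        let mut stocks = self.stocks.lock().map_err(|e| e.to_string())?;
        let stock = Stock {
            id: stocks.len() as i32 + 1,
            symbol: symbol.to_string(),
            name: name.to_string(),
        };
        stocks.push(stock.clone());
        Ok(stock)
    }
}

// Generic over the repository, like RefDataService<R> above.
pub struct RefDataService<R> {
    repository: R,
}

impl<R: RefDataRepository> RefDataService<R> {
    pub fn new(repository: R) -> Self {
        Self { repository }
    }

    // Returns the symbols of all stored stocks, in insertion order.
    pub fn all_symbols(&self) -> Result<Vec<String>, String> {
        let stocks = self.repository.get_all_stocks()?;
        Ok(stocks.into_iter().map(|stock| stock.symbol).collect())
    }

    // Stores a stock and returns the id the repository assigned to it.
    pub fn add(&self, symbol: &str, name: &str) -> Result<i32, String> {
        Ok(self.repository.add_stock(symbol, name)?.id)
    }
}
```

The service never names a concrete repository type, so swapping the in-memory version for the Postgres one only changes the line that constructs the service.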

Don’t forget to register the refdata module in lib.rs.

We’ll use some of these newly created types later on in main.rs, so we need to make sure they are publicly visible. Let’s update refdata.rs to expose what we need.

mod models;
mod repository;
mod service;

pub use repository::{PostgresRefDataRepository, RefDataRepository};
pub use service::RefDataService;

At this point, everything should compile, albeit with some warnings around unused variables and functions.
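As a small, self-contained illustration of this pattern, the sketch below keeps the submodules private and re-exports a single type. It uses inline modules so it fits in one file, and the with_symbols and symbols methods are hypothetical, added only to give the type something to do.

```rust
pub mod refdata {
    // Private: not reachable as `refdata::models` from outside.
    mod models {
        pub struct Stock {
            pub symbol: String,
        }
    }

    // Private as well, but free to use its sibling module.
    mod service {
        use super::models::Stock;

        pub struct RefDataService {
            stocks: Vec<Stock>,
        }

        impl RefDataService {
            pub fn with_symbols(symbols: &[&str]) -> Self {
                let stocks = symbols
                    .iter()
                    .map(|symbol| Stock { symbol: symbol.to_string() })
                    .collect();
                Self { stocks }
            }

            pub fn symbols(&self) -> Vec<&str> {
                self.stocks.iter().map(|stock| stock.symbol.as_str()).collect()
            }
        }
    }

    // The re-export is the only public path to the type.
    pub use self::service::RefDataService;
}
```

Callers write refdata::RefDataService, while refdata::service::RefDataService would be rejected by the compiler because the service module is private.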

Running the service

With our refdata service implementation now compiling successfully, it’s time to build and run the gRPC server using tonic to serve the refdata service.

Configuring logging

Proper logging is crucial for debugging and monitoring our service. While a comprehensive setup for structured logging and tracing is beyond this post’s scope, we’ll configure the tracing library for nicer log output and replace the existing print statement. So that we can control the log level, we’ll also add in a call to dotenvy.

use anyhow::Result;
use tracing::info;
use tracing_subscriber::layer::SubscriberExt;
use tracing_subscriber::util::SubscriberInitExt;

#[tokio::main]
async fn main() -> Result<()> {
    dotenvy::dotenv()?;
    setup_tracing_registry();
    info!("This will be a trading application!");

    Ok(())
}

fn setup_tracing_registry() {
    tracing_subscriber::registry()
        .with(tracing_subscriber::EnvFilter::new(
            std::env::var("RUST_LOG").unwrap_or_else(|_| "info,trading_api=debug".into()),
        ))
        .with(tracing_subscriber::fmt::layer().pretty())
        .init();
}

For dotenvy to work, add a .env file at the same level as the Cargo.toml.
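The fallback filter string, info,trading_api=debug, sets a global level of info and raises the trading_api crate to debug. As a rough illustration of how such a string breaks down, here is a toy parser. It is a simplification that treats every bare token as a global level and is not how EnvFilter is implemented; parse_directives and effective_filter are hypothetical names.

```rust
/// Toy parser for filter strings such as "info,trading_api=debug".
/// Returns (target, level) pairs; a bare token is assumed to be a level
/// that applies globally. EnvFilter supports more syntax than this.
pub fn parse_directives(filter: &str) -> Vec<(Option<String>, String)> {
    filter
        .split(',')
        .map(str::trim)
        .filter(|part| !part.is_empty())
        .map(|part| match part.split_once('=') {
            Some((target, level)) => (Some(target.to_string()), level.to_string()),
            None => (None, part.to_string()),
        })
        .collect()
}

/// Mirrors the fallback in setup_tracing_registry: use the RUST_LOG value
/// when one is provided, otherwise the default filter string.
pub fn effective_filter(rust_log: Option<&str>) -> String {
    rust_log.unwrap_or("info,trading_api=debug").to_string()
}
```

With no RUST_LOG set, the effective filter is the default string, which the toy parser splits into a global info level and a debug level for the trading_api target.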

If you’d like to override the log level, just set the RUST_LOG variable in .env.

RUST_LOG=info

The tonic service

With logging out of the way, the next step is to serve the refdata service from a tonic-powered gRPC server.

Building the refdata server is straightforward. We’ll also add a reflection service, which we’ll need for grpcui.


use anyhow::Result;
use tonic::transport::Server;
use tracing::info;
use tracing_subscriber::layer::SubscriberExt;
use tracing_subscriber::util::SubscriberInitExt;

use trading_api::refdata::{PostgresRefDataRepository, RefDataService};
use trading_api::services::proto::refdata::ref_data_server::RefDataServer;
use trading_api::services::FILE_DESCRIPTOR_SET;

#[tokio::main]
async fn main() -> Result<()> {
    dotenvy::dotenv()?;
    setup_tracing_registry();

    let reflection_service = tonic_reflection::server::Builder::configure()
        .register_encoded_file_descriptor_set(FILE_DESCRIPTOR_SET)
        .build()?;
    let refdata_service = RefDataServer::new(build_refdata_service());

    let address = "0.0.0.0:8080".parse()?;
    info!("Service is listening on {address}");

    Server::builder()
        .add_service(reflection_service)
        .add_service(refdata_service)
        .serve(address)
        .await?;

    Ok(())
}

fn build_refdata_service() -> RefDataService<PostgresRefDataRepository> {
    let repository = PostgresRefDataRepository::new();
    RefDataService::new(repository)
}

fn setup_tracing_registry() {
    tracing_subscriber::registry()
        .with(tracing_subscriber::EnvFilter::new(
            std::env::var("RUST_LOG").unwrap_or_else(|_| "info,trading_api=debug".into()),
        ))
        .with(tracing_subscriber::fmt::layer().pretty())
        .init();
}

With this code in place, you should now be able to run the service.

cargo run

Using grpcui

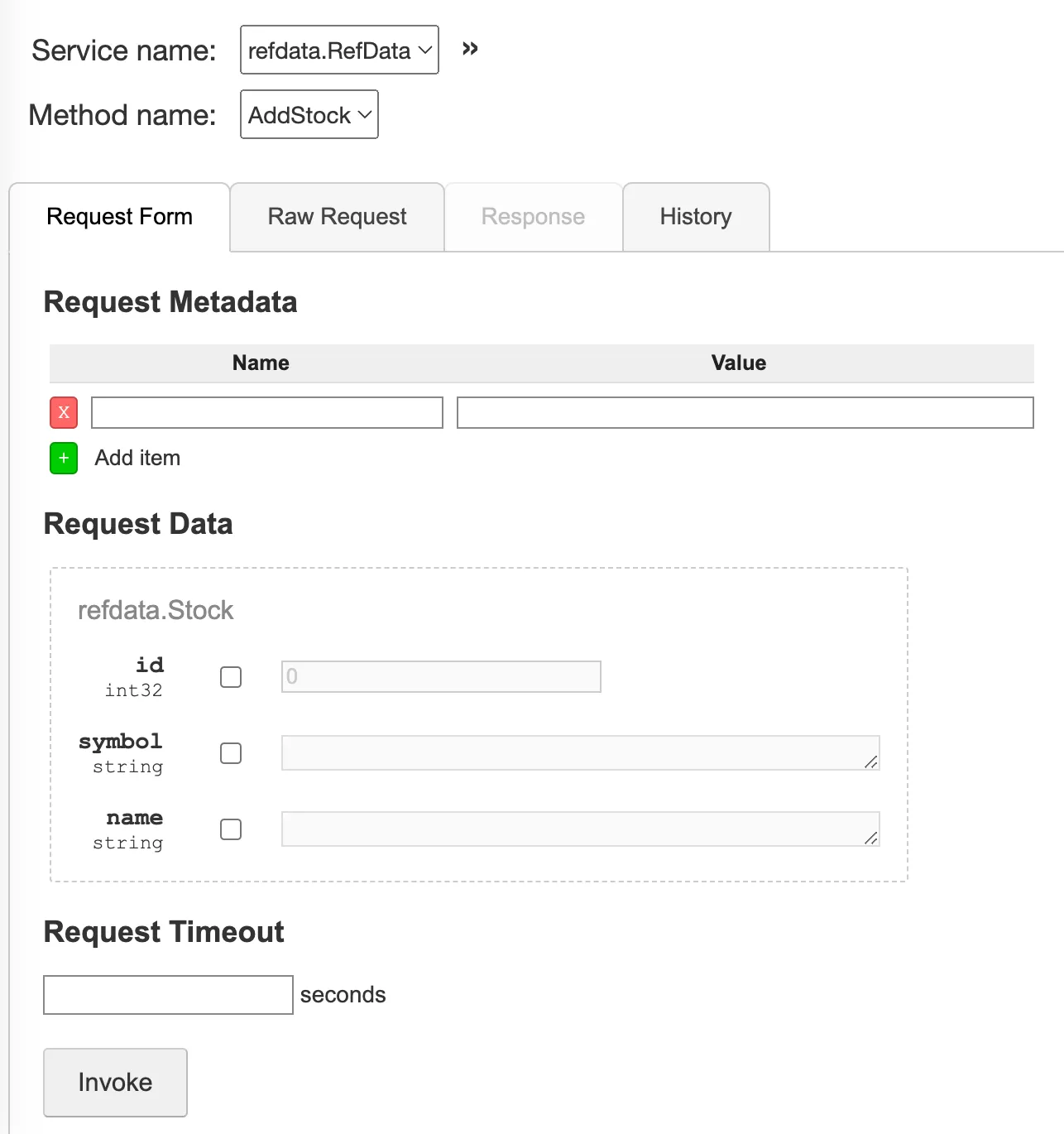

Now that our server is up and running, let’s use grpcui to explore its capabilities interactively. This tool provides a graphical interface for testing and inspecting our gRPC services, offering a practical way to verify our setup and functionality.

grpcui --plaintext 0.0.0.0:8080

The port number needs to correspond to the port number specified for the tonic service.
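To make the link between the two port numbers explicit, here is a small sketch of how the listen address string breaks down. It relies only on the standard library’s SocketAddr parsing, which is what the parse call in main resolves to; parse_listen_address and client_target are hypothetical helper names.

```rust
use std::net::{AddrParseError, SocketAddr};

/// Parses a listen address such as "0.0.0.0:8080" into a socket address.
pub fn parse_listen_address(address: &str) -> Result<SocketAddr, AddrParseError> {
    address.parse()
}

/// Hypothetical helper: builds a target a local client could connect to,
/// reusing the server's port so the two cannot drift apart.
pub fn client_target(address: &SocketAddr) -> String {
    format!("localhost:{}", address.port())
}
```

The 0.0.0.0 part is the unspecified IPv4 address, which tells the server to listen on all interfaces, while the part after the colon is the port that the grpcui command has to match. Note that this parser accepts only IP addresses, so a string like localhost:8080 would be rejected as a listen address.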

grpcui should automatically open in your browser, but if not, it should also print the port where it’s exposed on localhost.

In the UI, you should see a dropdown for the list of services, which only contains RefData for now. Under RefData, you’ll see the methods listed.

Of course, the endpoints will fail, as our repository is just a bunch of todos, but being able to see the endpoints proves that the server is running correctly.
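The reason the stubs compile but fail at runtime is that todo!() type-checks as any return type and panics when executed. The sketch below demonstrates this with a standalone, synchronous stub; get_all_stocks here is a simplified stand-in for the repository method, and stub_panics is a hypothetical helper. How the panic surfaces to a gRPC client depends on tonic and the runtime, so that part isn’t shown.

```rust
use std::panic;

// Stub in the style of the repository methods: it satisfies the
// signature at compile time but panics as soon as it is called.
pub fn get_all_stocks() -> Result<Vec<String>, String> {
    todo!()
}

/// Returns true if calling the stub panics instead of returning a value.
pub fn stub_panics() -> bool {
    panic::catch_unwind(get_all_stocks).is_err()
}
```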

Conclusion

To wrap up, we’ve successfully established a foundational gRPC service for managing stock reference data, integrating essential Rust crates and setting the stage for a robust, type-safe communication layer. Although our endpoints aren’t fully functional yet, we’ve laid the groundwork for subsequent enhancements, which we’ll tackle in the next installment of this series.

The resulting code can be found in the v1-refdata-grpc branch on GitHub.

Next post - Part 3: Repository for the reference data service

David Steiner

I'm a software engineer and architect focusing on performant cloud-native distributed systems.