This is the second part in the “Serverless Rust API on AWS” series. Part 1 gave an overview of the Rust ecosystem for serverless development and general considerations for picking the tools and libraries used in this post. We discussed why Rust is an excellent choice for building efficient, reliable APIs. If you want to dive straight into coding, feel free to skip the first part.

In this post, we’ll build a Poem API with a basic CRUD interface for managing currencies. For now, the focus will be on local development, and we’ll avoid using any AWS components. Part 3 will demonstrate the ease with which the service we’re implementing here can be migrated to AWS Lambda.

The final code for Part 2 is hosted on GitHub.

Initial setup

Starting with an empty repository, let’s add the following Cargo.toml:

    [package]
    name = "serverless-rust-api"
    version = "0.1.0"
    edition = "2021"

    [[bin]]
    name = "local"
    path = "src/bin/local.rs"

    [dependencies]
    anyhow = "^1.0.86"
    async-trait = "^0.1"
    envy = "^0.4"
    poem = "^3.0"
    poem-lambda = "^5.0"
    poem-openapi = { version = "^5.0", features = ["swagger-ui"] }
    serde = { version = "^1.0", features = ["derive"] }
    thiserror = "^1.0"
    tokio = { version = "^1.38", features = ["full"] }
    tracing = "^0.1"
    tracing-subscriber = { version = "^0.3", features = ["json", "env-filter"] }

    [dev-dependencies]
    poem = { version = "^3.0", features = ["test"] }

The project will consist of a library, which contains the bulk of the code, and eventually two entrypoints, src/bin/local.rs and src/bin/serverless.rs, for local development and Lambda respectively. We only need the local entrypoint for now, so ignore serverless.rs.

Create a blank src/lib.rs file and dummy main function in src/bin/local.rs.

    use anyhow::Result;

    #[tokio::main]
    async fn main() -> Result<()> {
        Ok(())
    }

With the 3 files in place, the project should compile when you run cargo build --bin local.

A note on dependencies

In case you aren’t familiar with some of the crates listed in Cargo.toml, here is a quick description of each.

Async runtime and tracing

Tokio probably requires no introduction. We’ll use it as our async runtime. tracing is part of the Tokio ecosystem. Along with tracing-subscriber, we’ll use it for structured logging and instrumentation.

Async traits

async-trait provides async support for traits. Even though async functions in traits have been supported in stable Rust since late 2023 (Rust 1.75), the native support has limitations: a trait with async functions cannot be used as a dyn trait object. async-trait is still required for dyn traits with async functions.
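To make the limitation concrete, here is a rough, standard-library-only sketch of the shape that async-trait gives a trait method: an ordinary function returning a boxed future, which keeps the trait usable behind dyn. The Greeter trait, the English type and the tiny block_on helper are hypothetical names invented for this illustration, and the macro's real expansion differs in detail.

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// Hypothetical trait for illustration. Written with a native `async fn`,
// this trait could not be used as `dyn Greeter`; returning a boxed future
// keeps it usable as a trait object.
pub trait Greeter: Send + Sync {
    fn greet<'a>(&'a self, name: &'a str) -> Pin<Box<dyn Future<Output = String> + Send + 'a>>;
}

pub struct English;

impl Greeter for English {
    fn greet<'a>(&'a self, name: &'a str) -> Pin<Box<dyn Future<Output = String> + Send + 'a>> {
        // The async block is boxed and pinned so callers can await it
        // without knowing its concrete type.
        Box::pin(async move { format!("Hello, {name}!") })
    }
}

// Waker that does nothing; sufficient for futures that complete immediately.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

// Minimal executor for this sketch only. It polls in a loop, so it is only
// suitable for futures that never wait on I/O; a real application would use Tokio.
pub fn block_on<F: Future>(future: F) -> F::Output {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut future = Box::pin(future);
    loop {
        if let Poll::Ready(value) = future.as_mut().poll(&mut cx) {
            return value;
        }
    }
}
```

With this shape, a value such as Arc<dyn Greeter> can be created and its greet future awaited (or driven with block_on in this sketch), which is the property our repository trait relies on.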

Web framework

Poem is our web framework of choice. It provides Lambda integration through the poem-lambda crate, and OpenAPI support through the poem-openapi crate. We’ll enable the test feature in development, which provides a test client we can use to implement API tests.

Error handling

The anyhow and thiserror crates are used to make error handling more convenient. If you aren’t already familiar with these crates, I recommend checking them out, as they are pretty pervasive in the Rust ecosystem.

Serialisation

A perhaps even more pervasive crate is serde. We’ll use it for type-safe serialisation and deserialisation.

Configuration

envy is a crate for deserialising environment variables into serde types. We’ll use it for configuration passed into Lambda as environment variables, as well as configuration for local development.

The repository

Before we start working on the API layer, let’s implement a repository suitable for storing and retrieving currencies.

We’ll use DynamoDB for persistence in the final implementation. However, for local development and automated testing, decoupling from a real AWS environment can be beneficial. We have two options to do so:

- A local DynamoDB database using the official Docker image.

- An abstract repository with an implementation which replaces real database actions.

For local development, I prefer keeping things as close to the real solution as possible. DynamoDB tables are practically free at low traffic levels, so creating real tables on DynamoDB for development purposes is often a good idea. Using a local DynamoDB instance is an equally valid approach, offering a balance between realism and convenience.

For automated testing, I recommend going with an “offline” option. A local DynamoDB instance works best for integration tests and other deeper styles of testing, which I find the most valuable. However, there’s also value in shallower API tests where we don’t care about the persistence layer. In such cases, an in-memory repository implementation may be more appropriate, offering faster test execution and easier setup.

For this demo project, we only need a very basic key-value store for currencies. Creating an abstraction layer for the repository along with an in-memory implementation is trivial and provides flexibility. For the sake of complete decoupling from AWS and to demonstrate the power of abstraction, let’s build a version with in-memory storage. This approach allows us to easily switch between different storage implementations (like DynamoDB or in-memory) without changing our application logic.

The abstract repository

Let’s create a repository module (src/repository.rs) and register it as a public module in src/lib.rs.

Create 2 sub-modules inside src/repository:

| Module | Path | Purpose |
|---|---|---|
| base | src/repository/base.rs | The base trait and the Currency struct. |
| memory | src/repository/memory.rs | The in-memory implementation of the repository. |

Here is the base repository module:

    use serde::{Deserialize, Serialize};
    use std::sync::Arc;

    pub type SharedRepository = Arc<dyn Repository>;

    #[derive(Clone, Debug, Deserialize, Serialize)]
    pub struct Currency {
        pub code: String,
        pub name: String,
        pub symbol: String,
    }

    #[async_trait::async_trait]
    pub trait Repository: Sync + Send + 'static {
        async fn add_currency(&self, currency: Currency) -> crate::error::Result<Currency>;
        async fn get_currency(&self, code: &str) -> crate::error::Result<Currency>;
        async fn delete_currency(&self, code: &str) -> crate::error::Result<Currency>;
    }

The repository trait defines the necessary API to manage currencies.

The Currency struct encapsulates the information we store about a particular currency. For example, British Pounds may be stored as

    let gbp = Currency {
        code: "GBP".to_string(),
        name: "British Pounds".to_string(),
        symbol: "£".to_string(),
    };

Note that we have also added a type alias, SharedRepository = Arc<dyn Repository>. This is a cloneable, Sync and Send type that we can eventually pass to Poem with either the DynamoDB or the in-memory repository contained inside it.
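As a small illustration of why an Arc around a Send + Sync trait object is convenient to pass around, the sketch below shares one store between several threads. It is deliberately synchronous and uses only the standard library; Store, SharedStore, CountingStore and record_from_threads are hypothetical stand-ins, not part of the project code.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

// Hypothetical, synchronous stand-in for the article's async `Repository` trait.
pub trait Store: Send + Sync + 'static {
    fn record(&self);
    fn total(&self) -> usize;
}

// Mirrors the shape of `SharedRepository = Arc<dyn Repository>`.
pub type SharedStore = Arc<dyn Store>;

#[derive(Default)]
pub struct CountingStore {
    hits: AtomicUsize,
}

impl Store for CountingStore {
    fn record(&self) {
        self.hits.fetch_add(1, Ordering::SeqCst);
    }

    fn total(&self) -> usize {
        self.hits.load(Ordering::SeqCst)
    }
}

// Each worker receives its own clone of the Arc. Cloning only bumps a
// reference count, so every clone points at the same underlying store.
pub fn record_from_threads(store: &SharedStore, workers: usize) {
    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let store = Arc::clone(store);
            thread::spawn(move || store.record())
        })
        .collect();

    for handle in handles {
        handle.join().expect("worker thread panicked");
    }
}
```

Callers only ever see the trait object, so swapping CountingStore for another implementation would not change record_from_threads, which is the same decoupling the SharedRepository alias is meant to provide.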

The in-memory repository

The in-memory repository uses a HashMap wrapped in a RwLock for thread-safe concurrent access:

    use std::collections::HashMap;
    use tokio::sync::RwLock;

    use crate::error::Error;
    use crate::repository::{Currency, Repository};

    pub struct InMemoryRepository {
        storage: RwLock<HashMap<String, Currency>>,
    }

    impl Default for InMemoryRepository {
        fn default() -> Self {
            Self {
                storage: RwLock::new(HashMap::new()),
            }
        }
    }

    #[async_trait::async_trait]
    impl Repository for InMemoryRepository {
        async fn add_currency(&self, currency: Currency) -> crate::error::Result<Currency> {
            let mut storage = self.storage.write().await;
            // Keys are lower-cased so that lookups are case-insensitive.
            storage.insert(currency.code.to_lowercase(), currency.clone());

            Ok(currency)
        }

        async fn get_currency(&self, code: &str) -> crate::error::Result<Currency> {
            let storage = self.storage.read().await;
            storage
                .get(&code.to_lowercase())
                .cloned()
                .ok_or_else(|| Error::NotFound(code.to_string()))
        }

        async fn delete_currency(&self, code: &str) -> crate::error::Result<Currency> {
            let mut storage = self.storage.write().await;
            storage
                .remove(&code.to_lowercase())
                .ok_or_else(|| Error::NotFound(code.to_string()))
        }
    }

For the use paths to be correct, make sure your src/repository.rs re-exports the required types:

    mod base;
    mod memory;

    pub use base::{Currency, Repository, SharedRepository};
    pub use memory::InMemoryRepository;

Error handling

You may be wondering what the Result and Error types we’re returning from these functions are. We use thiserror to implement a custom error type in src/error.rs:

    use thiserror::Error;

    #[derive(Debug, Error)]
    pub enum Error {
        #[error("item {0} was not found")]
        NotFound(String),
        #[error("unknown error")]
        Other,
    }

    pub type Result<T> = std::result::Result<T, Error>;

Register this new error module in lib.rs as a private module.

Even though our use-case is trivial, this error type provides a foundation for more complex error scenarios as the application grows.
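For readers new to thiserror, the following hand-written sketch shows roughly the boilerplate the derive saves us: a Display implementation carrying the messages, plus the std::error::Error marker implementation. It uses only the standard library; CurrencyError and lookup_name are hypothetical names chosen for this illustration, and the code the derive actually generates may differ.

```rust
use std::fmt;

// Hand-written equivalent of a small `thiserror`-derived enum.
#[derive(Debug)]
pub enum CurrencyError {
    NotFound(String),
    Other,
}

impl fmt::Display for CurrencyError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            // Same messages as the `#[error(...)]` attributes in src/error.rs.
            CurrencyError::NotFound(item) => write!(f, "item {item} was not found"),
            CurrencyError::Other => write!(f, "unknown error"),
        }
    }
}

// Marks the type as a standard error so it works with `?`, `Box<dyn Error>` and anyhow.
impl std::error::Error for CurrencyError {}

// Hypothetical lookup used only to show the error being returned and propagated.
pub fn lookup_name(code: &str) -> Result<&'static str, CurrencyError> {
    match code {
        "gbp" => Ok("British Pound"),
        _ => Err(CurrencyError::NotFound(code.to_string())),
    }
}

// The `?` operator converts `CurrencyError` into a boxed error automatically.
pub fn describe(code: &str) -> Result<String, Box<dyn std::error::Error>> {
    let name = lookup_name(code)?;
    Ok(format!("{code} is {name}"))
}
```

The derive becomes more valuable as variants are added, since each new variant only needs one attribute rather than another match arm in a manual Display implementation.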

At this point, everything should compile again.
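Looking back at the in-memory repository, two behaviours are easy to miss: keys are lower-cased, so lookups are case-insensitive, and adding a currency whose code already exists silently overwrites the stored entry. The sketch below reproduces both in a synchronous, standard-library-only form. CurrencyStore is a hypothetical simplification that uses std::sync::RwLock rather than Tokio's lock and returns Option instead of our Result type.

```rust
use std::collections::HashMap;
use std::sync::RwLock;

#[derive(Clone, Debug, PartialEq)]
pub struct Currency {
    pub code: String,
    pub name: String,
}

// Hypothetical synchronous counterpart of `InMemoryRepository`.
#[derive(Default)]
pub struct CurrencyStore {
    inner: RwLock<HashMap<String, Currency>>,
}

impl CurrencyStore {
    // Inserts or overwrites the entry and returns the previous value, if any.
    pub fn add(&self, currency: Currency) -> Option<Currency> {
        let mut map = self.inner.write().expect("lock poisoned");
        map.insert(currency.code.to_lowercase(), currency)
    }

    // Looks the currency up by its lower-cased code.
    pub fn get(&self, code: &str) -> Option<Currency> {
        let map = self.inner.read().expect("lock poisoned");
        map.get(&code.to_lowercase()).cloned()
    }
}
```

If overwriting is not desired, the previous value returned by insert is one place where a conflict could be detected and turned into an error variant.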

The OpenAPI service

Now that we have a repository with functionality to store currencies, let’s define the OpenAPI service. We’ll implement an api module (registered as a public module in src/lib.rs) with 2 sub-modules: payload for the OpenAPI types and endpoints for the API endpoints.

The OpenAPI types

We’ll create the OpenAPI type definitions using poem-openapi in src/api/payload.rs.

    use poem_openapi::payload::{Json, PlainText};
    use poem_openapi::{ApiResponse, Object};

    use crate::error::{Error, Result};

    #[derive(Object)]
    pub struct Currency {
        pub code: String,
        pub name: String,
        pub symbol: String,
    }

    impl From<crate::repository::Currency> for Currency {
        fn from(currency: crate::repository::Currency) -> Self {
            Self {
                code: currency.code,
                name: currency.name,
                symbol: currency.symbol,
            }
        }
    }

    #[derive(ApiResponse)]
    pub(crate) enum Response {
        #[oai(status = 200)]
        Currency(Json<Currency>),

        #[oai(status = 404)]
        NotFound(PlainText<String>),

        #[oai(status = 500)]
        InternalServerError(PlainText<String>),
    }

    impl From<Result<crate::repository::Currency>> for Response {
        fn from(result: Result<crate::repository::Currency>) -> Self {
            match result {
                Ok(currency) => Response::Currency(Json(currency.into())),
                Err(err) => match err {
                    Error::NotFound(code) => {
                        let msg = format!("{code} is not found");
                        Response::NotFound(PlainText(msg))
                    }
                    Error::Other => {
                        let msg = "internal server error".to_string();
                        Response::InternalServerError(PlainText(msg))
                    }
                },
            }
        }
    }

While there’s some duplication in defining Currency again for OpenAPI, maintaining a clear separation between persistence and API layer types is beneficial. As applications grow, differences often emerge between these representations, so it’s best to separate them early. Because the conversion between the two is an explicit From implementation, the compiler checks it whenever the API type changes.

We’ve also used a From implementation to map our custom error variants to HTTP responses with appropriate status codes and messages.
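The mapping idea can be seen in isolation in the sketch below, which converts a repository-style Result into a status code and body without any web framework. RepoError, HttpReply and reply_for are hypothetical names for this illustration; in the real service the same role is played by the Response enum and poem-openapi.

```rust
#[derive(Debug)]
pub enum RepoError {
    NotFound(String),
    Other,
}

// Framework-free stand-in for an HTTP response.
#[derive(Debug, PartialEq)]
pub struct HttpReply {
    pub status: u16,
    pub body: String,
}

impl From<Result<String, RepoError>> for HttpReply {
    fn from(result: Result<String, RepoError>) -> Self {
        match result {
            Ok(name) => HttpReply { status: 200, body: name },
            // Each error variant gets exactly one status code. The match is
            // exhaustive, so a new variant will not compile until it is mapped.
            Err(RepoError::NotFound(code)) => HttpReply {
                status: 404,
                body: format!("{code} is not found"),
            },
            Err(RepoError::Other) => HttpReply {
                status: 500,
                body: "internal server error".to_string(),
            },
        }
    }
}

// Handlers can now end with `.into()`, as the endpoints in this post do.
pub fn reply_for(result: Result<String, RepoError>) -> HttpReply {
    result.into()
}
```

Keeping the mapping in a single From implementation means handlers never choose status codes themselves, so the error-to-status policy lives in one place.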

The endpoints

Now let’s add the API endpoints that interact with the repository in src/api/endpoints.rs:

    use poem::web::Data;
    use poem_openapi::param::Path;
    use poem_openapi::payload::Json;
    use poem_openapi::OpenApi;
    use tracing::info;

    use crate::api::payload::{Currency, Response};
    use crate::repository;

    pub struct CurrencyApi;

    #[OpenApi]
    impl CurrencyApi {
        #[oai(path = "/currencies", method = "post")]
        async fn create(
            &self,
            Data(repository): Data<&repository::SharedRepository>,
            Json(payload): Json<Currency>,
        ) -> Response {
            info!(code = payload.code, "creating currency");

            let currency = repository::Currency {
                code: payload.code,
                name: payload.name,
                symbol: payload.symbol,
            };
            repository.add_currency(currency).await.into()
        }

        #[oai(path = "/currencies/:code", method = "get")]
        async fn get(
            &self,
            Data(repository): Data<&repository::SharedRepository>,
            Path(code): Path<String>,
        ) -> Response {
            info!(code, "getting currency");
            repository.get_currency(&code).await.into()
        }

        #[oai(path = "/currencies/:code", method = "delete")]
        async fn delete(
            &self,
            Data(repository): Data<&repository::SharedRepository>,
            Path(code): Path<String>,
        ) -> Response {
            info!(code, "deleting currency");
            repository.delete_currency(&code).await.into()
        }
    }

This layer is a thin wrapper around the repository.

The function arguments use Poem’s extractors (Data, Json, and Path) to access request information and context in a type-safe manner:

- Data passes arbitrary objects (like our repository) to handlers.
- Json automatically deserialises JSON payloads into typed objects, such as our Currency payload.
- Path extracts and parses path parameters.

The service

To build a service from CurrencyApi, let’s add a new function in src/api.rs to register an OpenAPI service at the /api route:

mod endpoints;mod payload;

use poem::{Endpoint, EndpointExt, Route};use poem_openapi::OpenApiService;

use crate::api::endpoints::CurrencyApi;use crate::repository::SharedRepository;

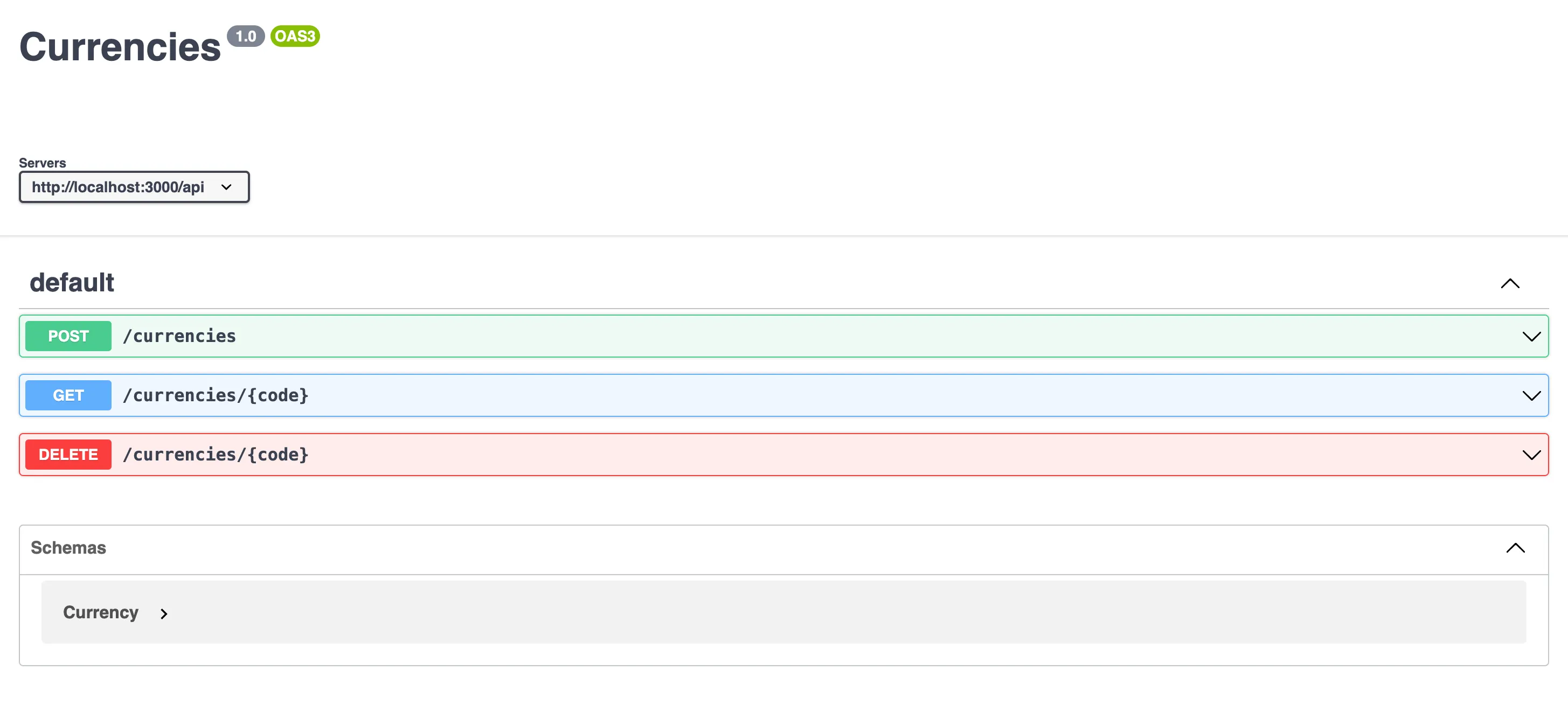

pub fn build_app(repository: SharedRepository) -> anyhow::Result<impl Endpoint> { let api_service = OpenApiService::new(CurrencyApi, "Currencies", "1.0").server("http://localhost:3000/api"); let ui = api_service.swagger_ui(); let app = Route::new() .nest("/api", api_service) .nest("/", ui) .data(repository);

Ok(app)}Poem provides solutions for displaying the OpenAPI spec using

Swagger,

Redoc,

or RapiDoc UI.

In this example, we set up the application to serve Swagger UI at the / path.

To demonstrate the test utilities provided by Poem, let’s add a few unit tests to the bottom of src/api.rs as well:


    #[cfg(test)]
    mod tests {
        use poem::http::StatusCode;
        use poem::test::TestClient;
        use poem::Endpoint;
        use std::sync::Arc;

        use crate::api::build_app;
        use crate::repository::{Currency, InMemoryRepository};

        fn setup_client() -> TestClient<impl Endpoint> {
            let repository = Arc::new(InMemoryRepository::default());
            let app = build_app(repository).expect("app to be created successfully");
            TestClient::new(app)
        }

        #[tokio::test]
        async fn test_get_currency() {
            let client = setup_client();
            let ccy = Currency {
                code: "GBP".to_string(),
                name: "British Pound".to_string(),
                symbol: "£".to_string(),
            };
            let response = client.post("/api/currencies").body_json(&ccy).send().await;
            response.assert_status_is_ok();

            let response = client.get("/api/currencies/gbp").send().await;
            response.assert_status_is_ok();
            response.assert_json(ccy).await;
        }

        #[tokio::test]
        async fn test_get_non_existent() {
            let client = setup_client();
            let response = client.get("/api/currencies/eur").send().await;
            response.assert_status(StatusCode::NOT_FOUND);
        }

        #[tokio::test]
        async fn test_create_then_delete() {
            let client = setup_client();
            let ccy = Currency {
                code: "GBP".to_string(),
                name: "British Pound".to_string(),
                symbol: "£".to_string(),
            };
            let response = client.post("/api/currencies").body_json(&ccy).send().await;
            response.assert_status_is_ok();

            let response = client.delete("/api/currencies/gbp").send().await;
            response.assert_status_is_ok();
        }

        #[tokio::test]
        async fn test_delete_non_existent() {
            let client = setup_client();
            let response = client.delete("/api/currencies/eur").send().await;
            response.assert_status(StatusCode::NOT_FOUND);
        }
    }

These tests use the in-memory repository for persistence, so they exercise the API endpoints end to end without any external dependencies.

Running the service locally

The final step before we can run the service is a main function that builds and starts the application. If you followed the initial setup steps, you should already have an entry in Cargo.toml for the local entrypoint:

    [[bin]]
    name = "local"
    path = "src/bin/local.rs"

The file at this path will contain the main function which sets up the service for local development.

Let’s add the following content to src/bin/local.rs:

    use anyhow::Result;
    use poem::listener::TcpListener;
    use std::sync::Arc;

    use serverless_rust_api::api::build_app;
    use serverless_rust_api::repository::InMemoryRepository;

    #[tokio::main]
    async fn main() -> Result<()> {
        tracing_subscriber::fmt().pretty().init();
        let repository = Arc::new(InMemoryRepository::default());
        let app = build_app(repository)?;
        poem::Server::new(TcpListener::bind("0.0.0.0:3000"))
            .run(app)
            .await?;

        Ok(())
    }

This setup does the following:

- Initialises a pretty-printed logging system for better readability during development.

- Creates an in-memory repository for storing currency data.

- Builds the application using the build_app function we defined earlier.

- Starts a server that listens on all interfaces (0.0.0.0) on port 3000.
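Binding to 0.0.0.0 makes the server reachable on every network interface, which is convenient in containers but broader than needed on a laptop. As a hedged sketch, the bind address could be made configurable with a fallback to the value used above. The function names and the idea of feeding in an optional setting (for example from an environment variable) are assumptions for illustration, not part of the project code.

```rust
use std::net::{AddrParseError, SocketAddr};

// The address hard-coded in src/bin/local.rs.
pub const DEFAULT_BIND: &str = "0.0.0.0:3000";

// Parses an optional, user-supplied address and falls back to the default.
// Only literal IP addresses are accepted here; a host name such as
// "localhost:3000" is rejected because no DNS resolution is performed.
pub fn resolve_bind_address(configured: Option<&str>) -> Result<SocketAddr, AddrParseError> {
    configured.unwrap_or(DEFAULT_BIND).parse()
}

// True when the socket would accept connections on every interface.
pub fn listens_on_all_interfaces(addr: &SocketAddr) -> bool {
    addr.ip().is_unspecified()
}
```

Passing Some("127.0.0.1:3000") would restrict the server to the local machine, while None keeps the behaviour described in the list above.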

You can now run the application using Cargo:

    cargo run --bin local

This command will compile and start your local development server. You should see output indicating that the server has started, and it will be accessible at http://localhost:3000.

To test the API:

- Open a web browser and navigate to http://localhost:3000 to access the Swagger UI.
- Use the Swagger UI to interact with your API endpoints, or use tools like curl or Postman to send HTTP requests to http://localhost:3000/api/currencies.

Remember that this local setup uses an in-memory repository, so any data you add will be lost when you stop the server.
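If you would rather stay in Rust than reach for curl, a dependency-free smoke check can be sketched with a raw TCP connection. This is a minimal illustration rather than a proper HTTP client: build_request and send are hypothetical helpers, the host and port are the ones assumed throughout this post, and the response is returned as raw text, status line and headers included.

```rust
use std::io::{Read, Write};
use std::net::TcpStream;

// Builds a minimal HTTP/1.1 request. `Connection: close` asks the server to
// close the socket after responding, so the reader below sees end-of-stream.
pub fn build_request(method: &str, path: &str, json_body: Option<&str>) -> String {
    let mut request =
        format!("{method} {path} HTTP/1.1\r\nHost: localhost:3000\r\nConnection: close\r\n");
    if let Some(body) = json_body {
        request.push_str("Content-Type: application/json\r\n");
        // Content-Length counts bytes, not characters ("£" is two bytes in UTF-8).
        request.push_str(&format!("Content-Length: {}\r\n", body.len()));
        request.push_str("\r\n");
        request.push_str(body);
    } else {
        request.push_str("\r\n");
    }
    request
}

// Sends the request to the locally running server and returns the raw response text.
pub fn send(request: &str) -> std::io::Result<String> {
    let mut stream = TcpStream::connect("127.0.0.1:3000")?;
    stream.write_all(request.as_bytes())?;
    let mut response = String::new();
    stream.read_to_string(&mut response)?;
    Ok(response)
}
```

With the server running, sending a POST built for /api/currencies with a JSON body containing code, name and symbol, followed by a GET for /api/currencies/gbp, should produce responses whose first lines carry the 200 status.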

Conclusion

In this tutorial, we’ve successfully built a robust foundation for a serverless Rust API using the Poem framework. Our implementation includes:

- A well-structured API for managing currency data.

- OpenAPI documentation with Swagger UI integration.

- Error handling and custom error types.

- An in-memory repository for local development and testing.

- A modular design that separates concerns and allows for easy extension.

While our current implementation runs entirely locally, it serves as an excellent starting point for a cloud-native application. The modularity of our design, particularly the use of trait-based repositories, sets us up for a smooth transition to cloud-based services.

We have implemented a simple currency API using Poem. So far, no AWS components have been used; the implementation is entirely local.

The final code for Part 2 is hosted on GitHub.

In Part 3, we’ll dive into AWS integration and serverless deployment strategies. These additions will elevate our Rust API to a production-ready, cloud-native application capable of handling real-world scenarios and scaling to meet demand.

David Steiner

I'm a software engineer and architect focusing on performant cloud-native distributed systems.